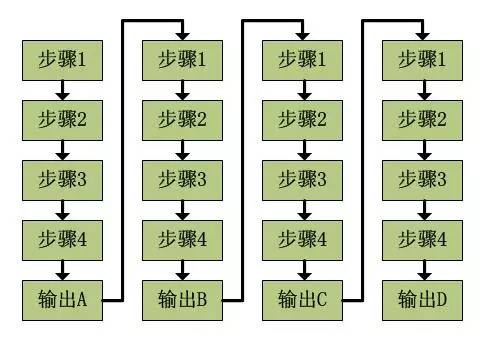

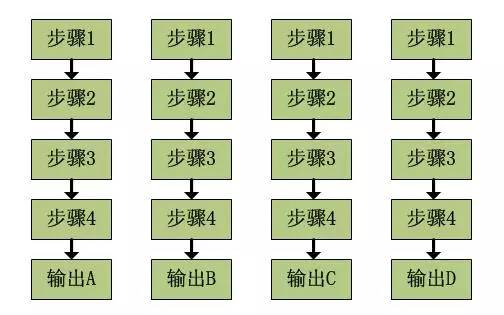

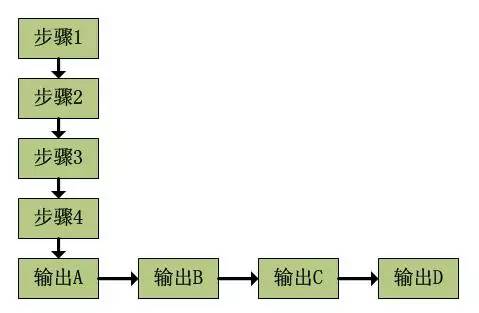

Beginners love to ask this question, and any textbook will give them an answer: glue logic was the FPGA's early job, real-time control made FPGAs useful, FPGAs implement all kinds of protocols with great flexibility, signal processing pushed FPGAs into the high end, and system-on-chip designs let an FPGA replace everything else... Yet I have been quite torn, and I keep asking myself: "What can an FPGA actually do?" It all started with a DVR project we are about to launch. Video capture and display are already working, so the next step is storage, and at that point transmission bandwidth and storage capacity become the problem: "It must be compressed." That means JPEG for still pictures and H.264 for video. My understanding of the various schemes is still preliminary, but I found that HiSilicon and TI offer dual-core solutions, similar in spirit to SOPC, that are dedicated to exactly this job. They come with a high barrier, though; in my manager's words, "the average person can't handle it." The cost is not only money, but also the time and effort engineers need to become familiar with a new, highly complex development environment. Stepping back, even a DSP seems hard to take on. The common approach on the market is DSP + FPGA, and there are dedicated ASICs that can handle encoding such as H.264, but their prices are prohibitively high. As a compromise, I was still thinking about adding a DSP, and I liked ADI's Blackfin, so I was ready to slowly begin my DSP journey. However, the question that had lingered in my mind for days only grew more insistent: "What can an FPGA do?" Naturally, when I compare a general-purpose controller or processor with an FPGA, my mind goes straight to Figure 1 and Figure 2 (and Figure 3).
Figure 1: General processing flow based on a controller or processor. Figure 2: FPGA-based parallel processing flow. Figure 3: FPGA-based pipeline processing flow.

In Figure 1, the general-purpose controller or processor must work step by step, because software is inherently sequential: all the steps of one output must finish before work on the next output can begin. Assuming each output takes 5 steps (counting the output itself as a step) and each step takes one time unit, four outputs require 20 time units. Many DSPs now have powerful hardware acceleration engines, such as DMA for simply moving data, but the amount of work they can do, or can do in parallel with the software, is quite limited. Their flexibility is poor, and poor coordination with the software can greatly reduce processing speed.

Compare this with the FPGA processing in Figures 2 and 3. Parallel processing, in Figure 2, is powerful: it runs four times as fast as the software approach. Parallelism is the FPGA's greatest advantage, but it trades a lot of resources for speed; in layman's terms, you spend a lot of money to buy performance, and I don't think that is a solution everyone can afford. Figure 3 is a good compromise. Pipelining is the classic method in FPGA design, and indeed in signal processing as a whole: it can complete the task with only a quarter of the resources of the parallel approach without substantially reducing processing speed.

Back to JPEG and H.264 compression: the FPGA is fully up to the job, and a quick search online turns up plenty of FPGA solutions. Even granting that the algorithms are complex and the real-time requirements demanding, an FPGA is sufficient, especially with pipelining.
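To make the timing comparison concrete, here is a small Python model. It is a sketch under the assumptions stated above (4 outputs, 5 steps per output, one time unit per step); the function names are hypothetical, and real hardware stages are rarely this evenly balanced. It counts the total time for the sequential, 4-way parallel and 5-stage pipeline cases, and cross-checks the pipeline formula with a cycle-by-cycle simulation.

```python
def sequential_time(n_outputs: int, steps: int) -> int:
    """One processor: every step of every output runs one after another."""
    return n_outputs * steps


def parallel_time(n_outputs: int, steps: int, lanes: int) -> int:
    """`lanes` identical copies of the whole datapath work side by side."""
    batches = -(-n_outputs // lanes)  # ceiling division
    return batches * steps


def pipeline_time(n_outputs: int, steps: int) -> int:
    """One datapath split into `steps` stages; a new item enters every time unit."""
    if n_outputs == 0:
        return 0
    return steps + (n_outputs - 1)  # fill latency, then one result per unit


def simulate_pipeline(n_outputs: int, steps: int) -> int:
    """Cycle-by-cycle model; returns the cycle in which the last result completes."""
    stages = [None] * steps  # stages[i] holds the item currently in stage i
    next_item, finished, cycle = 0, 0, 0
    while finished < n_outputs:
        cycle += 1
        # Every item advances one stage; a new item enters stage 0 if any remain.
        new = next_item if next_item < n_outputs else None
        if new is not None:
            next_item += 1
        stages = [new] + stages[:-1]
        # Whatever occupies the last stage at the end of this cycle is complete.
        if stages[-1] is not None:
            finished += 1
            stages[-1] = None
    return cycle


if __name__ == "__main__":
    N, S = 4, 5
    print("sequential:", sequential_time(N, S))             # 20
    print("parallel x4:", parallel_time(N, S, lanes=4))     # 5
    print("pipeline:", pipeline_time(N, S), simulate_pipeline(N, S))  # 8 8
```

In resource terms, the parallel version needs four full copies of the 5-step datapath (20 stage units), while the pipeline needs one copy (5 stage units), which is where the "quarter of the resources" comes from. The pipeline's first result appears only after 5 time units, but after that one result comes out every time unit.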
With a pipeline, the first result may take a while to come out (which most systems can tolerate), but that does not prevent subsequent data from being output in real time. I think this is the best compromise between cost (device resources) and performance. Beyond that, even complex algorithms come down to storage and arithmetic. Storage in real-time processing depends largely on the device's on-chip memory; external memory only adds complexity and reduces speed. Addition, subtraction, multiplication and division are easy to handle with embedded multipliers or the various dedicated DSP blocks, but square roots, powers and other awkward operations can only be handled with lookup tables. So this, in the end, is what FPGAs are for: algorithms. I have little more to add on that.

Having said that, I realize something is off, and someone will want to throw bricks at me. There really is no job an FPGA cannot do, but there are jobs an FPGA is not suited for. Personally, I think strongly sequential tasks, such as file systems, are a real hassle. Even managing a simple SD card file system is endlessly fiddly: the FPGA code for reading the data is hard to write, and although one large state machine might solve the problem, it is easy for the designer to get lost in it.
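As an illustration of the lookup-table approach for square roots, here is a minimal Python sketch that precomputes a square-root ROM the way one might before loading it into FPGA block RAM. The 8-bit input width, the 4 fractional output bits and the function names are assumptions chosen for illustration. The hex output puts one value per line, a layout that Verilog's `$readmemh` can load.

```python
import math

ADDR_BITS = 8  # assumed input width: 2**8 = 256 ROM entries
FRAC_BITS = 4  # assumed output format: fixed point with 4 fractional bits


def build_sqrt_rom(addr_bits: int = ADDR_BITS, frac_bits: int = FRAC_BITS) -> list[int]:
    """Precompute round(sqrt(x) * 2**frac_bits) for every possible input x."""
    scale = 1 << frac_bits
    return [round(math.sqrt(x) * scale) for x in range(1 << addr_bits)]


def sqrt_lookup(rom: list[int], x: int) -> int:
    """What the hardware does at run time: a single memory read, no arithmetic."""
    return rom[x]


def to_hex_lines(rom: list[int], digits: int = 2) -> str:
    """One zero-padded hex word per line, e.g. for a $readmemh initialisation file."""
    return "\n".join(f"{value:0{digits}x}" for value in rom)


if __name__ == "__main__":
    rom = build_sqrt_rom()
    print(sqrt_lookup(rom, 100) / (1 << FRAC_BITS))  # 10.0
    print(max(rom))                                  # 255, so 8 output bits suffice
```

Because the table is indexed directly by the input, its size doubles with every extra input bit; that is why this trick works best for narrow inputs, and the same pattern applies to powers or any other function of a small operand.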