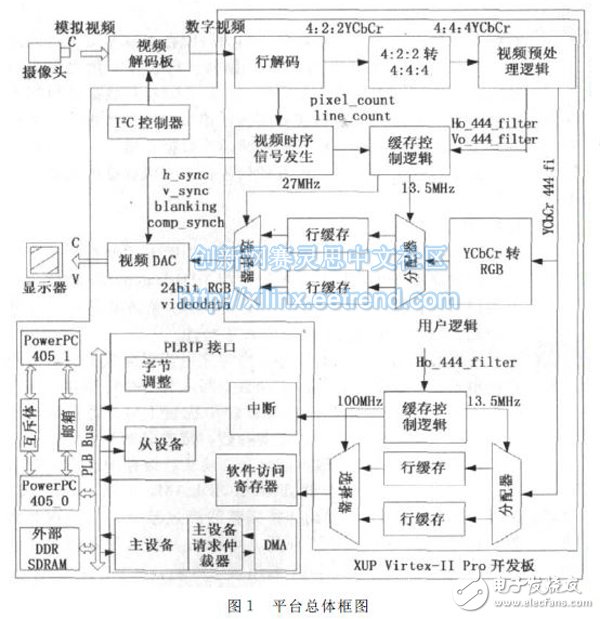

To capture, process and display video in real time, a real-time video processing platform based on a dual PowerPC hard-core architecture is designed and implemented. The video preprocessing algorithm is implemented in hardware and added to the hardware system as a user IP core, so the upper-layer video processing software reads the preprocessed image data directly from memory. The paper mainly describes how the hardware system with the dual PowerPC hard-core architecture is built on the FPGA: a ping-pong control algorithm buffers one line of image data, and DMA is used to store the image data in memory. Edge detection is taken as an example of a video preprocessing algorithm and implemented on the platform. Experimental results show that the platform needs only 40 ms for this processing, so it can process video in real time and has high practical value.

At present, most video processing platforms use DSP chips for image processing. Real-time video processing places extremely high demands on system performance, and even some of the simplest functions can exceed the processing power of a single general-purpose DSP chip. Once that limit is reached, the usual remedy is simply to add more DSP chips. In 2010, Guo Chunhui proposed a parallel video processing system based on multiple strips and multiple DSPs [1]. Multi-processor parallel processing of this kind increases processing speed, but it also makes system development more complex: tasks must be allocated sensibly, and the processors must communicate with one another and handle mutual exclusion. It also increases the power consumption of the system. The performance limitations of DSPs have led to the development of more specialized chips, such as multimedia processors, to overcome these problems.
However, these devices have turned out to be inflexible, suited only to a narrow range of applications, and they still have performance bottlenecks. Processor-based solutions are particularly limited in high-resolution video processing systems such as HDTV and medical imaging. Fundamentally, such a scheme is limited by the number of clock cycles needed to complete each multiply and add operation. Using an FPGA for video processing allows designers to implement video signal processing algorithms with parallel processing techniques. Designers can also trade design area against speed, and can implement a given function at a much lower clock frequency than a DSP requires. More importantly, the FPGA's flexibility accommodates firmware upgrades and future improvements to multimedia standards. This paper therefore proposes a real-time video processing platform based on reconfigurable FPGA hardware/software co-design. The platform is built with conventional FPGA development tools and provides a robust, modular architecture that meets the requirements of high performance and low power consumption.

Video is a continuously changing sequence of images. Video processing can generally be divided into low-level processing (i.e., preprocessing) and upper-layer processing. Low-level processing handles a large amount of data with relatively simple algorithms that offer a high degree of parallelism; upper-layer processing uses complex algorithms on a smaller amount of data. In the preprocessing stage, a software implementation is very time-consuming, whereas a hardware implementation can process large amounts of video data in parallel and greatly speed up video processing. In the upper-layer processing stage, a software implementation offers better cost-performance.
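To illustrate why low-level processing parallelizes so well, here is a minimal sketch of edge detection, the example preprocessing algorithm the paper uses. The paper does not say which edge operator it implements, so the 3×3 Sobel operator, the |Gx| + |Gy| magnitude approximation and the fixed threshold below are assumptions, and `sobel_edges` is a hypothetical name. Every output pixel depends only on its own 3×3 neighbourhood, which is what lets a hardware datapath compute pixels in parallel or in a pipeline.

```python
from typing import List

Frame = List[List[int]]

# 3x3 Sobel kernels for the horizontal (GX) and vertical (GY) gradients.
GX = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
GY = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def sobel_edges(frame: Frame, threshold: int) -> Frame:
    """Return a binary edge map (255 = edge, 0 = background).

    Border pixels are left at 0 because their 3x3 neighbourhood is incomplete.
    |Gx| + |Gy| replaces sqrt(Gx^2 + Gy^2), a common hardware-friendly approximation.
    """
    h, w = len(frame), len(frame[0])
    out = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = gy = 0
            # Each output pixel uses only its own 3x3 window, so all pixels are independent.
            for j in range(3):
                for i in range(3):
                    p = frame[y + j - 1][x + i - 1]
                    gx += GX[j][i] * p
                    gy += GY[j][i] * p
            out[y][x] = 255 if abs(gx) + abs(gy) >= threshold else 0
    return out


# Usage: a 4x4 frame with a vertical step from black to white in the middle.
frame = [[0, 0, 255, 255] for _ in range(4)]
edges = sobel_edges(frame, threshold=100)
```

For this input, both interior columns straddle the step, so `edges` marks the four interior pixels as edges and leaves the border at 0.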
For example, Visicom found that a DSP needed 67 cycles to complete a median filtering algorithm, whereas an FPGA, because it can implement the function in parallel, needs to run only at a 25 MHz clock. To achieve the same performance, the DSP would have to run at a clock frequency above 1.5 GHz. In this particular application, the FPGA solution is about 17 times as powerful as a DSP clocked at 100 MHz [2]. A wide range of real-time image and video preprocessing functions can be implemented in FPGA hardware, including real-time edge detection, scaling, color and chromatic aberration correction, shadow enhancement, image placement, histogram functions, sharpening, median filtering, blur analysis, and so on.

The real-time video processing platform in this design uses the Xilinx University Program (XUP) Virtex-II Pro development board. The board carries a Virtex-II Pro XC2VP30 FPGA with 30,816 logic cells, 136 18×18-bit multipliers, 2,448 Kb of block RAM and two PowerPC 405 processors. It also provides a DDR SDRAM DIMM slot supporting up to 2 GB of RAM, several expansion interfaces and an XSGA video interface. Together with an external video decoder board (supporting the ITU-R BT.656 video standard), the board can capture, process and display video.

The overall block diagram of the real-time video processing platform is shown in Figure 1. The video capture part includes the line decoding, 4:2:2 to 4:4:4 conversion, line buffer and buffer control logic modules, the allocator, the selector module, and so on. The test part includes the YCbCr to RGB conversion module, the video timing signal generation module, and others. These two parts are verification designs provided by Xilinx, so this article introduces them only briefly. The preprocessing part contains the video preprocessing logic module, which is described in detail below. Together, these three parts constitute the user logic.
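The paper states that a ping-pong control algorithm buffers one line of image data, and the capture part includes line buffer and buffer control logic. A minimal behavioural model of that idea, assuming two line-sized buffers that swap roles at the end of every line (one is filled by incoming pixels while the other, already complete, is read out, for example by a DMA transfer), might look like the following. The class and method names are hypothetical and are not taken from the paper or from Xilinx's reference design.

```python
from typing import List, Optional


class PingPongLineBuffer:
    """Two line buffers used alternately (hypothetical model, not the paper's HDL).

    While one buffer is being filled with the incoming video line, the other,
    already complete line can be read out, e.g. by a DMA transfer to memory.
    """

    def __init__(self, width: int) -> None:
        self.width = width
        self.buffers: List[List[int]] = [[0] * width, [0] * width]
        self.write_sel = 0                # index of the buffer currently being written
        self.write_pos = 0                # next pixel position within the current line
        self.ready: Optional[int] = None  # index of the most recently completed buffer

    def write_pixel(self, value: int) -> bool:
        """Store one pixel; return True when a full line has just been completed."""
        self.buffers[self.write_sel][self.write_pos] = value
        self.write_pos += 1
        if self.write_pos == self.width:
            # Line complete: hand this buffer to the reader and switch to the other one.
            self.ready = self.write_sel
            self.write_sel ^= 1
            self.write_pos = 0
            return True
        return False

    def read_line(self) -> List[int]:
        """Return a copy of the most recently completed line."""
        if self.ready is None:
            raise RuntimeError("no complete line available yet")
        return list(self.buffers[self.ready])


# Usage: stream three 4-pixel lines (0..3, 4..7, 8..11) through the buffer.
buf = PingPongLineBuffer(width=4)
lines = []
for pixel in range(12):
    if buf.write_pixel(pixel):
        lines.append(buf.read_line())
```

After the loop, `lines` holds the three lines in order, having alternated between the two buffers. In hardware the scheme works only if the reader finishes with a buffer before the writer returns to it, i.e. reading out one line must take no longer than capturing the next.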
The user logic and the PLB IP interface form a complete PLB-based user IP core that can easily be added to the hardware system of the video processing platform.
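The capture and test parts of the block diagram convert 4:2:2 video to 4:4:4 and YCbCr to RGB, but the paper treats these Xilinx reference modules as black boxes. The following is therefore only a sketch under stated assumptions. Samples arrive in the BT.656 multiplexed order Cb, Y, Cr, Y. Chroma is upsampled by simple replication, although the reference design may interpolate instead. The colour conversion uses the common ITU-R BT.601 studio-range coefficients. The function names are hypothetical.

```python
from typing import List, Tuple


def bt656_422_to_444(samples: List[int]) -> List[Tuple[int, int, int]]:
    """Convert a 4:2:2 stream in BT.656 order (Cb, Y0, Cr, Y1, ...) to (Y, Cb, Cr) triples.

    Assumption: chroma is replicated, i.e. each Cb/Cr pair is reused for both luma samples.
    """
    if len(samples) % 4:
        raise ValueError("expected a multiple of 4 samples (Cb, Y, Cr, Y)")
    out = []
    for k in range(0, len(samples), 4):
        cb, y0, cr, y1 = samples[k:k + 4]
        out.append((y0, cb, cr))
        out.append((y1, cb, cr))
    return out


def clamp8(v: float) -> int:
    """Round to the nearest integer and clamp to the 8-bit range 0..255."""
    return max(0, min(255, round(v)))


def ycbcr_to_rgb(y: int, cb: int, cr: int) -> Tuple[int, int, int]:
    """BT.601 studio range (Y 16-235, Cb/Cr 16-240) to full-range 8-bit RGB."""
    yy = 1.164 * (y - 16)
    r = yy + 1.596 * (cr - 128)
    g = yy - 0.813 * (cr - 128) - 0.391 * (cb - 128)
    b = yy + 2.018 * (cb - 128)
    return clamp8(r), clamp8(g), clamp8(b)


# Usage: one Cb/Y/Cr/Y group carrying a black pixel (Y=16) and a white pixel (Y=235).
pixels = bt656_422_to_444([128, 16, 128, 235])
rgb = [ycbcr_to_rgb(*p) for p in pixels]
```

Here `pixels` is `[(16, 128, 128), (235, 128, 128)]` and `rgb` maps them to black and white. A hardware version would typically replace the floating-point coefficients with fixed-point constants that suit the FPGA's 18×18-bit multipliers.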